Autonomous Golf Cart Testbed

Motivation

In the following, we illustrate how a standard electric golf car was converted to a fully autonomous vehicle, which can be used as a testbed for developing self-driving and deep learning algorithms.

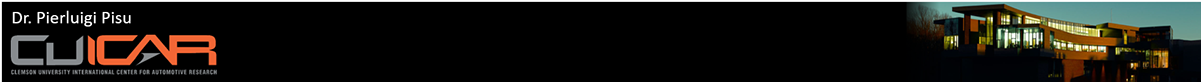

To perform autonomous functions, the vehicle is equipped with a set of sensors including a lidar, GPS, IMUs and stereo cameras. The standard DC motor was replaced with an AC induction motor, which allows regenerative braking and minimizes the need for mechanical brakes.

The hierarchical vehicle control structure consists of two levels: the high-level control is allocated to an NVIDIA Drive PX2, which uses sensor readings to generate a suitable path for the golf car to safely navigate the environment, while the low-level control resides on a dSPACE MicroAutoBox. A CAN bus carries the messages exchanged between the controllers and the actuator ECUs. The vehicle can be used to test algorithms that range from lane keeping, to obstacle detection and avoidance, to autonomous parking. Deep learning techniques can also be investigated in the context of pedestrian, traffic sign and vehicle detection. In the long term, the same vehicle will be used to investigate more advanced path planning techniques, and self-driving features in general.

Golf Car Testbed Architecture

The golf car testbed architecture consists of different devices that communicate with each other using a standard communication protocol and a standard framework:

- Controller Area Network (CAN bus): This communication protocol is used for low-level communication, so that control messages (e.g. torque, velocity) can be exchanged among the different devices.

- Robot Operating System (ROS): A flexible robotics framework that provides tools, libraries and conventions that aim to simplify the creation of complex and robust behavior across a variety of robotic platforms. This framework is used at the higher level, where the information coming from the IMU, lidar and cameras has to be analyzed.
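To make the low-level CAN messages concrete, the sketch below packs a torque and a velocity command into the 8-byte data field of a classic CAN frame and decodes it again. The arbitration ID, the signal layout and the scaling factors are hypothetical and chosen only for illustration; the actual message definitions of the testbed are not given here.

```python
import struct

# All three constants are assumptions for this example, not testbed values.
TORQUE_CMD_ID = 0x101   # hypothetical arbitration ID of the command frame
TORQUE_SCALE = 0.1      # hypothetical resolution: N*m per bit
SPEED_SCALE = 0.01      # hypothetical resolution: m/s per bit


def encode_command(torque_nm, speed_mps):
    """Pack torque and speed into an 8-byte classic CAN payload."""
    raw_torque = round(torque_nm / TORQUE_SCALE)
    raw_speed = round(speed_mps / SPEED_SCALE)
    # "<hH4x": little-endian signed 16-bit, unsigned 16-bit, 4 padding bytes
    return struct.pack("<hH4x", raw_torque, raw_speed)


def decode_command(payload):
    """Recover the physical values from the 8-byte payload."""
    raw_torque, raw_speed = struct.unpack("<hH4x", payload)
    return raw_torque * TORQUE_SCALE, raw_speed * SPEED_SCALE


payload = encode_command(-12.5, 3.2)   # negative torque, e.g. regenerative braking
torque, speed = decode_command(payload)
```

Fixed-point scaling of this kind is a common way to fit physical signals into the short payload of a CAN frame; the receiving ECU applies the same scale factors to recover the values.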

ROS Framework

ROS accelerates software development and simplifies software redistribution, as it includes an integrated framework and toolsets for robotics development. This makes ROS a strong candidate for researchers and developers working on robotics [1].

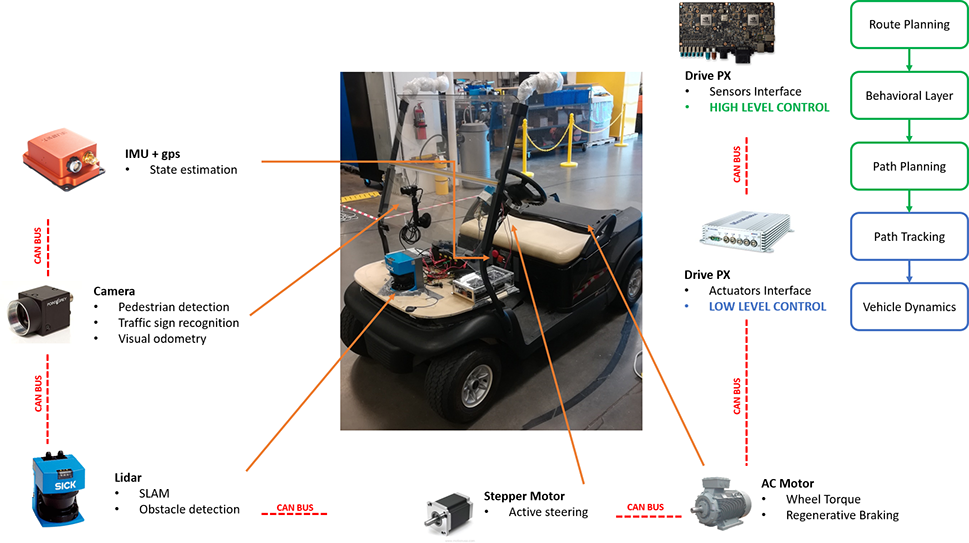

ROS is designed to be a loosely coupled system in which a process is called a node and every node should be responsible for one task. Nodes communicate with each other by passing messages over logical channels called topics. Each node can send data to or receive data from other nodes using the publish/subscribe model [1].
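The publish/subscribe idea can be illustrated with a few lines of plain Python. This is a toy model of the concept only and does not use the ROS API; the class `TopicBus` and the topic names `/scan` and `/imu` are assumptions made for the example.

```python
from collections import defaultdict


class TopicBus:
    """Toy stand-in for topic-based message passing (not the ROS API)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        # A node registers interest in a topic by providing a callback.
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # The publisher does not know who receives the message.
        for callback in self._subscribers.get(topic, []):
            callback(message)


bus = TopicBus()
received = []                              # plays the role of a subscriber node
bus.subscribe("/scan", received.append)

bus.publish("/scan", {"ranges": [1.2, 1.1, 0.9]})
bus.publish("/imu", {"yaw_rate": 0.02})    # no subscriber: silently dropped
```

Because publishers and subscribers only share a topic name, a node can be replaced or added without changing the others, which is what makes the system loosely coupled.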

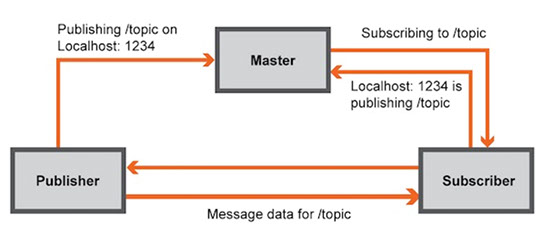

For the golf car testbed, a centralized structure was chosen in which the NVIDIA Drive PX2 manages all the communication between the devices and the decision algorithms (e.g. path planning, path tracking), as represented below.

Autonomous Algorithm

In the development of a self-driving vehicle it is important to differentiate indoor and outdoor navigation.

Indoor Navigation:

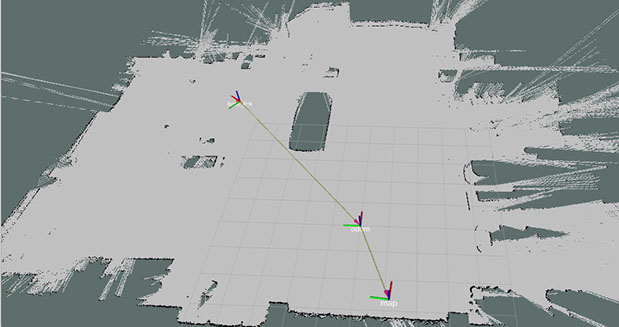

For indoor navigation, a SLAM approach is used, in which a map of an unknown environment is constructed (Fig. 4) and updated while simultaneously keeping track of the agent’s location within it. The most common algorithms are based on statistical techniques that estimate the posterior probability function for the pose of the robot and for the parameters of the map [2]. In the figure below, golf cart navigation in an indoor environment is presented. In this case the transformation matrices were computed using both an Unscented Kalman Filter (UKF) and an adaptive Monte Carlo localization (AMCL) approach.
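The idea of estimating a posterior over the pose can be sketched with a basic Monte Carlo localization step. The example is a deliberately simplified one-dimensional case with a fixed number of particles, so it is not the adaptive variant (AMCL) used on the vehicle; the corridor length, noise levels and the function name `mcl_step` are assumptions for illustration.

```python
import math
import random


def mcl_step(particles, motion, measured_range, wall=10.0,
             motion_sigma=0.1, sensor_sigma=0.5, rng=random):
    """One predict / weight / resample cycle of a 1D particle filter."""
    # 1) Predict: apply the commanded motion plus noise to every particle.
    moved = [p + motion + rng.gauss(0.0, motion_sigma) for p in particles]
    # 2) Weight: compare the measured range to the wall with the range
    #    each particle would expect to see (Gaussian sensor model).
    weights = [
        math.exp(-0.5 * ((measured_range - (wall - p)) / sensor_sigma) ** 2)
        for p in moved
    ]
    # 3) Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(0)
particles = [rng.uniform(0.0, 10.0) for _ in range(500)]  # unknown start pose
true_x = 2.0
for _ in range(5):
    true_x += 1.0                                  # vehicle moves 1 m per step
    particles = mcl_step(particles, 1.0, 10.0 - true_x, rng=rng)

estimate = sum(particles) / len(particles)         # posterior mean of the pose
```

The particle set is a sampled approximation of the posterior; after a few measurements the particles concentrate around the true position, and their mean serves as the pose estimate.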

Outdoor Navigation:

Environment perception is a fundamental function of autonomous vehicles: it provides the vehicle with crucial information on the driving environment, including the free drivable areas and the locations, velocities, and even predicted future states of surrounding obstacles. The environment perception task can be tackled using different sensors, e.g. lidars, radars and cameras, together with fusion algorithms.

Some preliminary studies were conducted using this testbed as illustrated in the following:

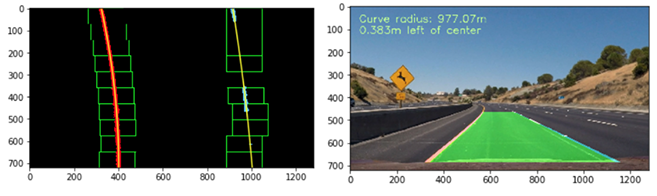

- Line Detection: as represented in Fig. 5, a sliding window approach is used to identify lane pixels, while the curvature is estimated through polynomial fitting.
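The curvature estimate can be sketched as follows, assuming the lane pixels have already been identified and that a second-order polynomial x = a*y^2 + b*y + c is fitted to them (the polynomial order is an assumption; it is not stated above). The radius of curvature at a given y is then R = (1 + (2*a*y + b)^2)^(3/2) / |2*a|. The function names and sample points are illustrative only.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_quadratic(ys, xs):
    """Least-squares fit of x = a*y**2 + b*y + c via the normal equations."""
    s = [sum(y ** k for y in ys) for k in range(5)]            # sums of y**k
    t = [sum(x * y ** k for x, y in zip(xs, ys)) for k in range(3)]
    m = [[s[4], s[3], s[2]],
         [s[3], s[2], s[1]],
         [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    det = det3(m)
    coeffs = []
    for col in range(3):                   # Cramer's rule, one column at a time
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        coeffs.append(det3(mc) / det)
    return tuple(coeffs)                   # (a, b, c)


def curvature_radius(a, b, y):
    """Radius of curvature of x = a*y**2 + b*y + c evaluated at y."""
    return (1.0 + (2.0 * a * y + b) ** 2) ** 1.5 / abs(2.0 * a)


# Synthetic lane points (metres) lying on a known parabola.
ys = [0.0, 1.0, 2.0, 3.0, 4.0]
xs = [0.02 * y * y + 0.1 * y + 1.5 for y in ys]
a, b, c = fit_quadratic(ys, xs)
radius = curvature_radius(a, b, 0.0)
```

In practice the fit is usually done on pixel coordinates converted to metres, so that the resulting radius has physical units.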

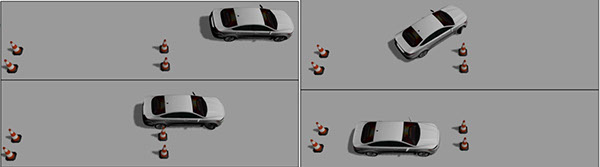

- Parking Maneuver: path planning is achieved with a continuous planner based on the Hybrid State A* algorithm. Kinematically feasible paths are generated from the start location to the available parking spot (goal). A decoupled architecture, with a pure pursuit controller and a PID controller, is used to traverse the trajectory at a target velocity.
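A decoupled tracking architecture of this kind can be sketched as below, assuming that pure pursuit handles the steering and the PID loop handles the speed (the usual split, although the assignment is not spelled out above). The steering law is the standard bicycle-model form delta = atan(2*L*sin(alpha) / l_d), with the pose referenced to the rear axle. The wheelbase, gains and function names are hypothetical.

```python
import math


def pure_pursuit_steering(pose, target, wheelbase):
    """Steering angle (rad) that drives the rear axle toward a lookahead point.

    pose is (x, y, yaw) of the rear axle; target is the (x, y) lookahead
    point on the planned path.
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead = math.hypot(dx, dy)
    alpha = math.atan2(dy, dx) - yaw       # bearing of target in vehicle frame
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


class PID:
    """Textbook PID controller, used here for the longitudinal speed loop."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0               # no derivative on the first sample
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


WHEELBASE = 1.6                            # hypothetical value in metres
speed_pid = PID(kp=1.0, ki=0.1, kd=0.0)    # hypothetical gains

steer = pure_pursuit_steering((0.0, 0.0, 0.0), (5.0, 5.0), WHEELBASE)
accel_cmd = speed_pid.step(error=2.0 - 1.5, dt=0.1)   # target minus measured speed
```

Keeping the two loops separate lets the lookahead distance and the speed gains be tuned independently, at the cost of ignoring the coupling between speed and steering dynamics.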

Video - Parallel parking

Video - Lane keeping

[1] http://www.ros.org/

[2] https://en.wikipedia.org/wiki/Simultaneous_localization_and_mapping