Rigid and Non-Rigid Classification Using Interactive Perception

Bryan Willimon, Stan Birchfield, and Ian Walker

Abstract

Robotics research tends to focus on either noncontact sensing or machine manipulation, but not both. This research explores the benefits of combining the two by addressing the problem of classifying unknown objects, such as those found in service robot applications. In the proposed approach, an object lies on a flat background, and the goal of the robot is to interact with and classify each object so that it can be studied further. The algorithm classifies each object using its color, shape, and flexibility. Experiments on a number of different objects demonstrate the ability to efficiently classify and label each item through interaction.

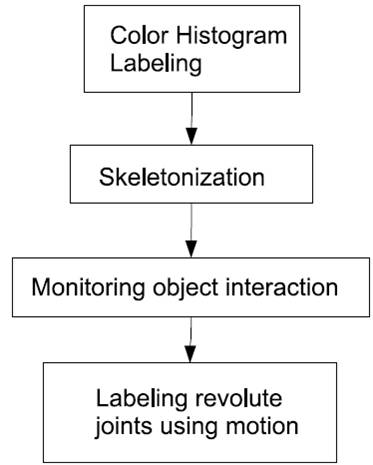

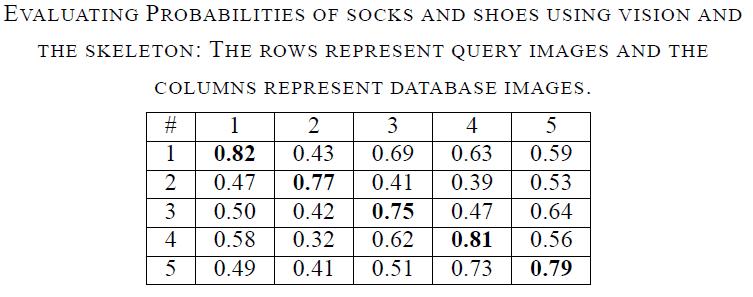

Algorithm


The goal of this work is to automatically learn the properties of an object for classification and future manipulation. The accompanying figure presents an overview of our classification process. First, the object is located in the image, and a color histogram is captured to model its appearance. Then, a 2D skeleton of the object is determined using a standard image-based skeletonization algorithm. The robotic arm then interacts with the object by prodding it from different directions. By monitoring the object's response to these movements, the revolute joints of the object are computed, as well as potential grasp points. We focus in this work on revolute joints because they are common in everyday objects (e.g., staplers, scissors, pliers, and hedge trimmers) and because they more closely model the behavior of non-rigid objects that have some stiffness (e.g., stuffed animals).
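The color-modeling step can be illustrated with a minimal sketch. The page does not state the color space, the number of bins, or the comparison measure, so the quantized RGB histogram, the `bins_per_channel` parameter, and the histogram-intersection score below are all assumptions chosen for illustration, and the function names are hypothetical.

```python
from collections import Counter


def color_histogram(pixels, bins_per_channel=4):
    """Normalized, quantized RGB histogram of an iterable of (r, g, b) pixels.

    Assumption: 8-bit channels and a bin count that divides 256 evenly.
    """
    step = 256 // bins_per_channel
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no pixels supplied")
    # Store only occupied bins; each value is the fraction of pixels in that bin.
    return {bin_id: n / total for bin_id, n in counts.items()}


def histogram_intersection(h1, h2):
    """Similarity in [0, 1]; 1 means the two normalized histograms are identical."""
    return sum(min(p, h2.get(bin_id, 0.0)) for bin_id, p in h1.items())


# Usage: a mostly red object compared with a half-red, half-green object.
mostly_red = color_histogram([(250, 10, 10)] * 8 + [(10, 10, 250)] * 2)
red_green = color_histogram([(240, 5, 5)] * 5 + [(5, 240, 5)] * 5)
print(histogram_intersection(mostly_red, red_green))
```

In practice only the pixels inside the object's binary mask would be passed in, so that the table background does not dominate the histogram.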


Experimental Results

The proposed approach was applied in a number of different scenarios to test its ability to perform practical interactive perception. A PUMA 500 robotic arm was used to interact with the objects, which rested upon a flat table with uniform appearance. Neither the objects nor their types were known to the system. The entire system, from image input to manipulation to classification, is automatic.


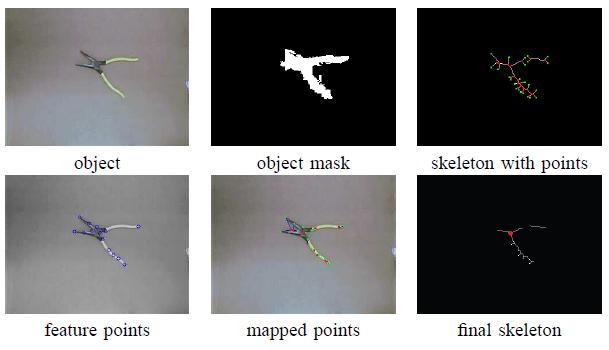

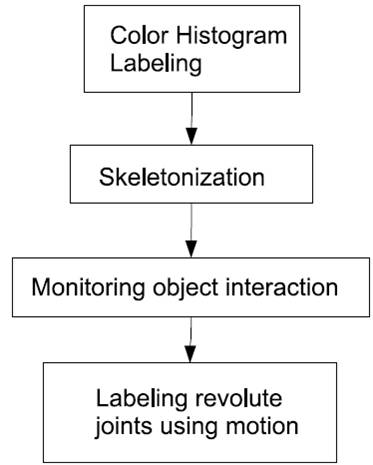

Articulated Rigid Object

Example of our approach on a pair of pliers. In reading order (left to right, top to bottom): the original image of the object, the binary mask of the object, the skeleton with the intersection points (red dots) and end points (green dots) labeled, the feature points gathered from the object, the image after mapping the feature points to the intersection points, and the final skeleton with the revolute joint (red point) automatically labeled. The intersection points are the possible revolute joints of the skeleton, and the end points are the interaction points.
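How the end points and intersection points are extracted from the skeleton is not described on this page. One common way to do it on a one-pixel-wide binary skeleton is the crossing number: count the 0-to-1 transitions while walking once around a pixel's eight neighbors. The sketch below assumes that technique and a skeleton stored as a list of rows of 0/1 values; the function names are hypothetical.

```python
# Offsets of the 8 neighbors, listed clockwise starting from north.
RING = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def crossing_number(skel, r, c):
    """Number of 0 -> 1 transitions in one clockwise walk around pixel (r, c)."""
    rows, cols = len(skel), len(skel[0])

    def at(rr, cc):
        # Pixels outside the image are treated as background.
        return skel[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0

    ring = [at(r + dr, c + dc) for dr, dc in RING]
    return sum(1 for i in range(8) if ring[i] == 0 and ring[(i + 1) % 8] == 1)


def classify_skeleton_points(skel):
    """Return (end_points, intersection_points) as lists of (row, col)."""
    end_points, intersections = [], []
    for r, row in enumerate(skel):
        for c, value in enumerate(row):
            if not value:
                continue
            cn = crossing_number(skel, r, c)
            if cn == 1:        # exactly one branch leaves this pixel
                end_points.append((r, c))
            elif cn >= 3:      # three or more branches meet here
                intersections.append((r, c))
    return end_points, intersections


# Usage: a plus-shaped skeleton has four end points and one intersection.
plus = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]
print(classify_skeleton_points(plus))
```

The crossing number is used here instead of a plain neighbor count because, with 8-connectivity, pixels adjacent to a junction can have several neighbors without being branch points themselves.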


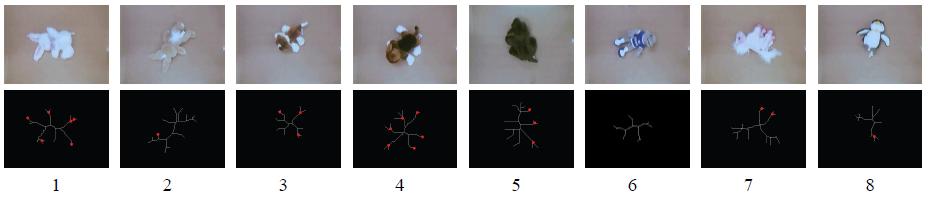

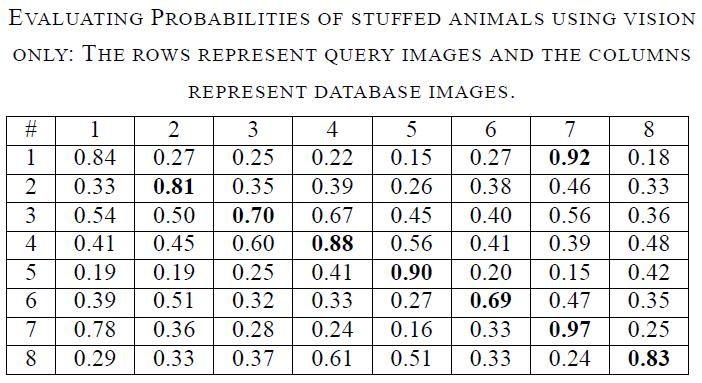

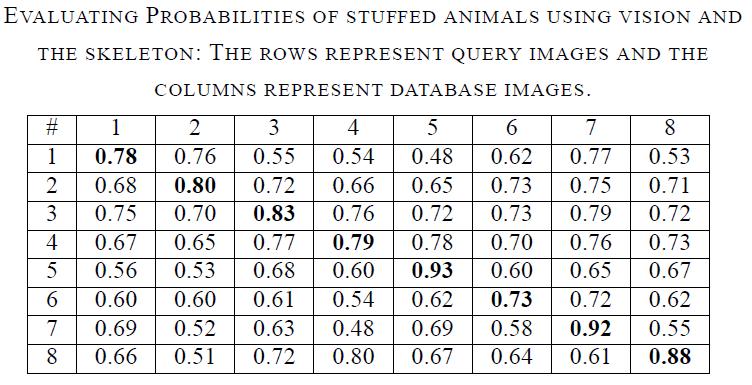

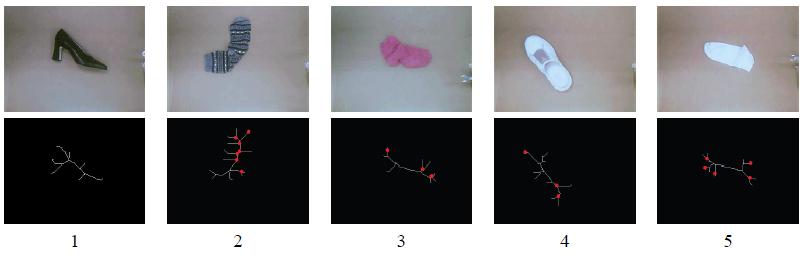

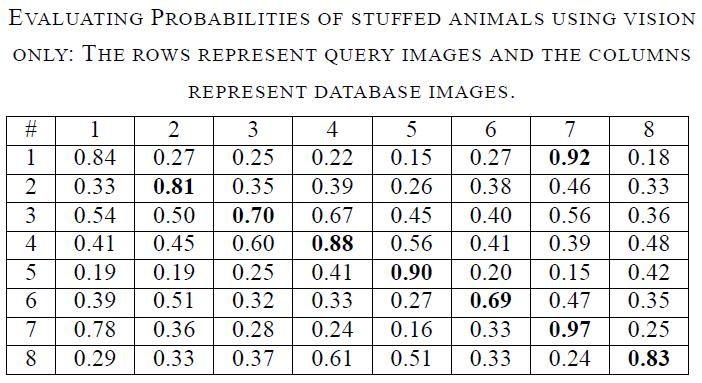

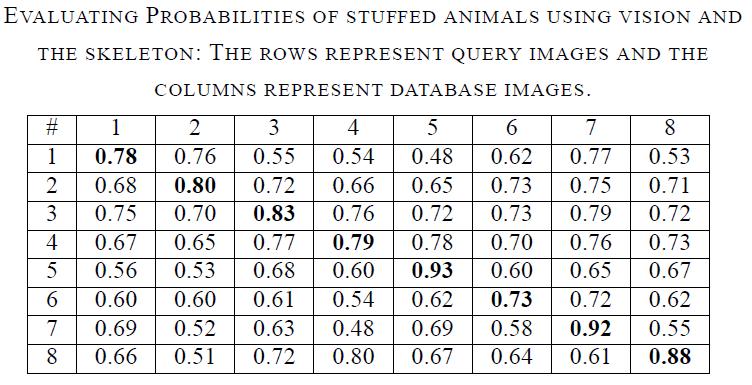

Classification Experiment: Stuffed Animals

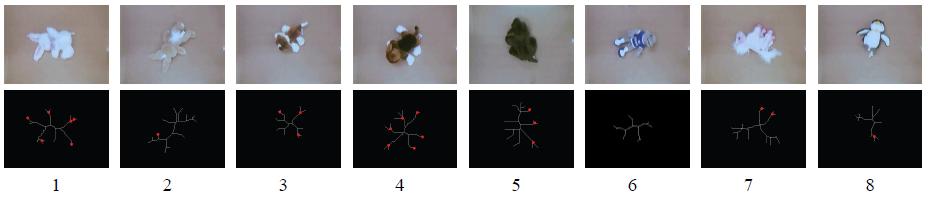

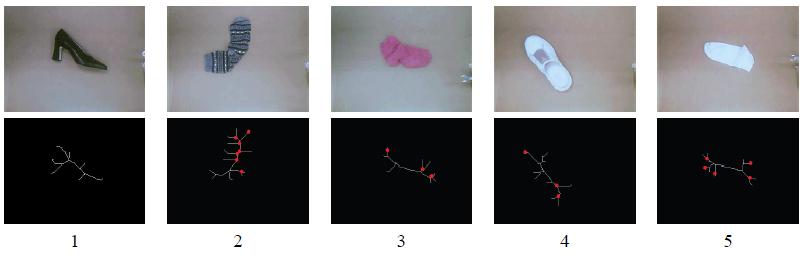

TOP: Images of the individual objects used for creating a database of previously encountered items. BOTTOM: The final skeletons of the objects with revolute joints automatically labeled (red dots).
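The page does not give the rule used to choose a revolute joint among the candidate skeleton points. As a rough illustration only, the sketch below relies on a geometric fact: a rotation about a pivot preserves each point's distance to that pivot. Given feature positions before and after a push, it scores every candidate by how well it preserves those distances for the features that moved. The function name, the `min_motion` threshold, and the scoring rule are assumptions, not the authors' method.

```python
import math


def select_revolute_joint(candidates, before, after, min_motion=2.0):
    """Pick the candidate pivot that best explains feature motion as a rotation.

    candidates: list of (x, y) candidate joint locations.
    before, after: index-aligned lists of (x, y) feature positions.
    Returns the best candidate, or None if no feature moved at least
    min_motion pixels (hypothetical threshold).
    """
    moved = [(p, q) for p, q in zip(before, after) if math.dist(p, q) >= min_motion]
    if not moved:
        return None

    def residual(pivot):
        # Total change in distance-to-pivot over all moving features;
        # zero for a perfect rigid rotation about the pivot.
        return sum(abs(math.dist(pivot, p) - math.dist(pivot, q)) for p, q in moved)

    return min(candidates, key=residual)


# Usage: two features rotate 90 degrees about the origin, one stays still.
before = [(10, 0), (6, 0), (-5, 0)]
after = [(0, 10), (0, 6), (-5, 0)]
print(select_revolute_joint([(5, 0), (0, 0), (0, 5)], before, after))
```

This scoring cannot distinguish candidates when only one feature moves, since every point on the perpendicular bisector of that feature's displacement preserves its distance, so several well-spread moving features are needed for a reliable choice.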


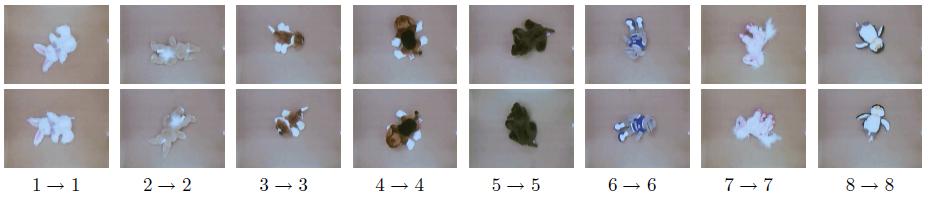

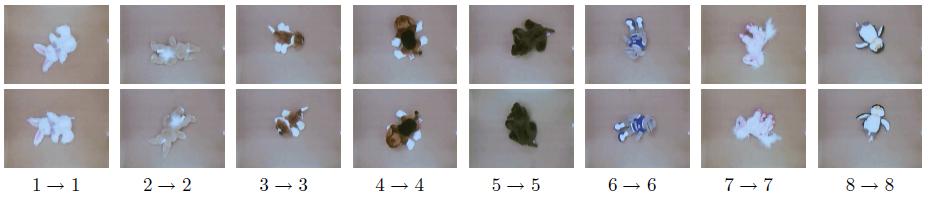

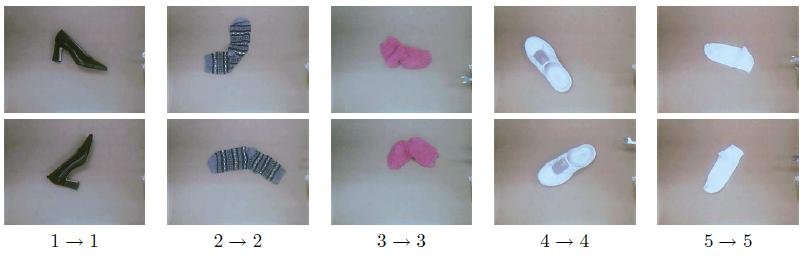

Results from matching query images obtained during a second run of the system (top) with database images gathered during the first run (bottom). The numbers indicate the ground truth identity of the object and the matched identity. All of the matches are correct.


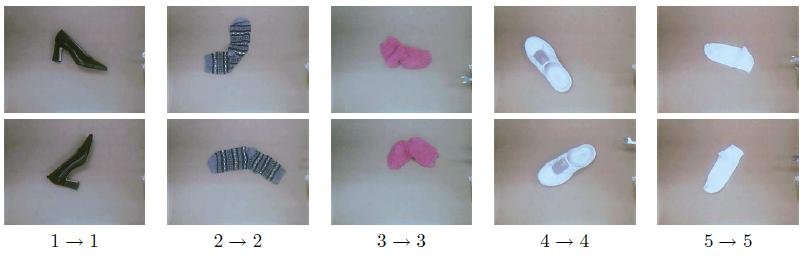

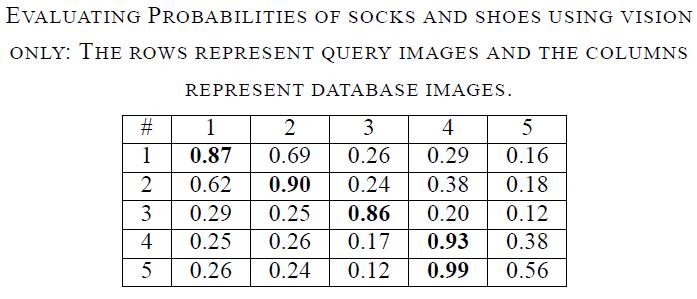

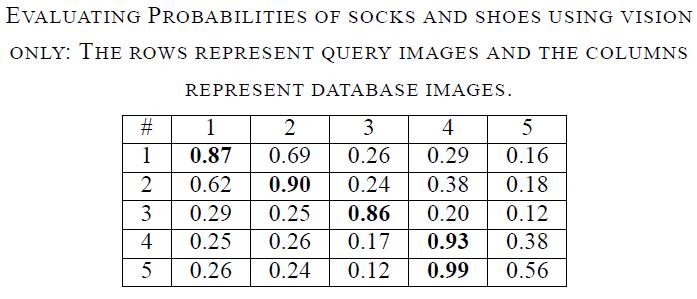

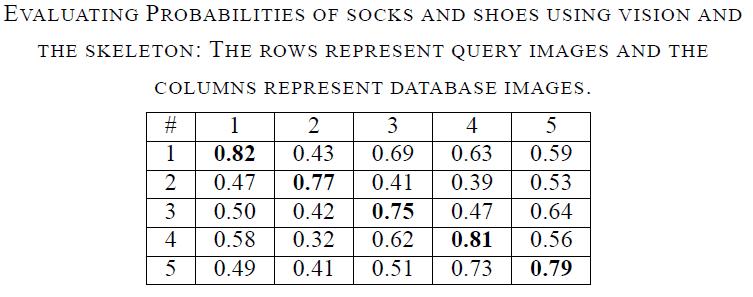

Classification Experiment: Socks and Shoes

TOP: Images of the individual objects gathered automatically by the system for the purpose of creating a database of objects previously encountered. BOTTOM: The final skeletons with revolute joints labeled.


Results from matching query images obtained during a second run of the system (top) with database images gathered during the first run (bottom) for the sorting experiment. There is one mistake.


Publications

Acknowledgements

This research was supported by the U.S. National Science Foundation under grants IIS-1017007, IIS-0844954, and IIS-0904116.